This post was prepared with reviews, discussion and insights from @polar and @rafa

Introduction

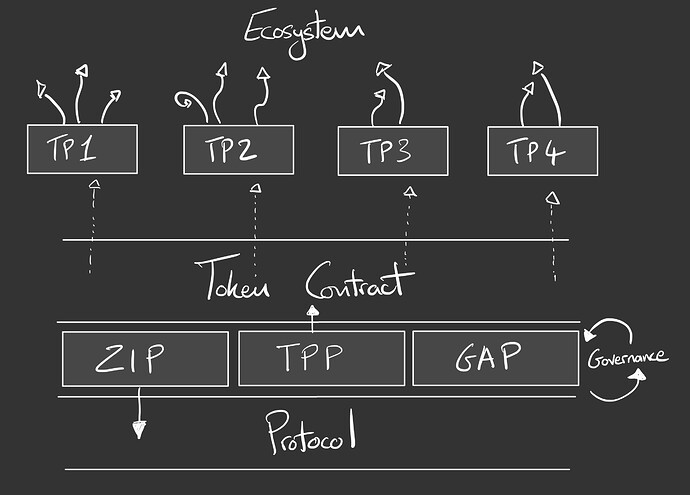

The ZKSync governance design is different from other protocol governance systems in the wider crypto ecosystem. It takes an innovative approach to funding activity across the ecosystem directly through the token contract, using Token Programme Proposals (TPPs).

In theory, these Token Programmes can be fully deployed directly from the governor contracts, giving minting rights directly from the ZK token contract in the form of capped minters, thereby creating “permissionless pathways”.

This post proposes a way of thinking about these Token Programmes that leads to the minimal, mechanistic and efficient use of the delegate-driven global consensus. Our goal is to create the cryptoeconomic context for an ecosystem to flourish by extending this mechanic towards a true cybernetic governance framework.

The Governance Proposal Structure

There is a wider discussion developing on the nature and purpose of the ZKSync governance systems, in The Telos of the DAO discussion. This post, however, focuses specifically on the proposal structures and how TPPs can take us towards a self-regulating cybernetic system design.

ZIPs: These are proposals that materially shape the protocol. They involve high-stakes decisions and are best conceptualized as the protocol upgrade mechanism. A key affordance of decentralised governance systems is that they allow systems to evolve technologically (rather than remaining static and ossified), which will be important for the ZKSync ecosystem as it pushes into the frontier of research and development in blockchain architecture.

TPPs: These are what we're primarily focussing on here. They are intended to move beyond the standard grant structure towards a more mechanistic design space, where tokens flow to mechanisms that distribute them for a clearly defined purpose. Their goal is to produce emergent ecosystem outcomes driven purely by incentives and flows in the token economy.

GAPs: Governance is the act of governing; it is an implicitly cyclical and self-referential process that requires evolutionary action. GAPs are designed to upgrade the governance processes themselves, sometimes referred to as metagovernance. Unlike the off-chain voting systems used elsewhere, GAPs are fully onchain with their own governor contract. This means the mechanism can be used both for signaling and for triggering onchain actions, which gives this pathway the potential to play into token programme operation. I also see no reason why GAPs couldn't be used to add entirely new proposal pathways, e.g. futarchy-based decision making, at some point in the future.

Token Programmes

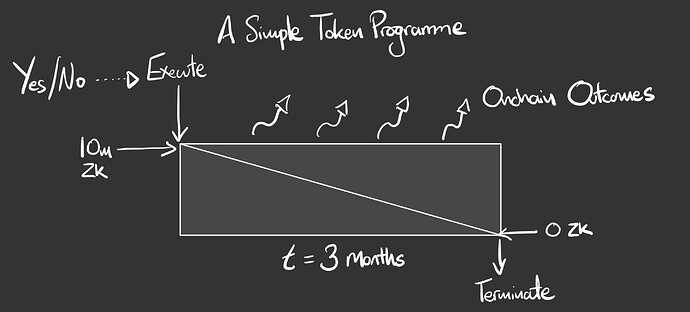

Let us consider an elementally simple Token Programme.

In this example we see the lifecycle of a TPP. A governance proposal is passed to execute the deployment of a smart contract, which is capitalised with 10m tokens via a capped minter. The tokens are emitted linearly, block by block, over a defined period (in this case 3 months) until depleted, leading to onchain outcomes. The programme then self-terminates when it hits its minting cap. A simple example of this could be an LP token staking mechanism for incentivising liquidity in a major trading pair.
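To make the lifecycle concrete, here is a minimal off-chain sketch in Python of a capped minter paired with a linear emission schedule. It is a toy model rather than the actual ZK token or capped minter contract: the names `CappedMinter` and `emitted_by`, and the figure of 7,200 blocks per day, are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class CappedMinter:
    """Toy model of a capped minter: it can mint up to `cap` tokens and no more."""
    cap: int
    minted: int = 0

    def mint(self, amount: int) -> int:
        """Mint up to `amount`, clipped to the remaining cap; return what was minted."""
        granted = max(0, min(amount, self.cap - self.minted))
        self.minted += granted
        return granted

    @property
    def exhausted(self) -> bool:
        return self.minted >= self.cap


def emitted_by(block: int, start_block: int, duration_blocks: int, cap: int) -> int:
    """Cumulative tokens a linear schedule has released by `block`."""
    elapsed = min(max(block - start_block, 0), duration_blocks)
    return cap * elapsed // duration_blocks


CAP = 10_000_000               # 10m tokens, as in the example above
BLOCKS_PER_DAY = 7_200         # assumption for illustration only
DURATION = 90 * BLOCKS_PER_DAY # roughly 3 months

minter = CappedMinter(cap=CAP)
for block in range(0, DURATION + 1, BLOCKS_PER_DAY):  # step one day at a time
    due = emitted_by(block, 0, DURATION, CAP) - minter.minted
    minter.mint(due)

# Once the cap is hit, further mint attempts grant nothing:
# the programme has self-terminated without any governance action.
leftover = minter.mint(1)
```

The point of the sketch is that termination needs no vote: the cap itself is the stop condition.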

The decision here would be a simple Yes/No binary decision to execute the programme at the parameters defined in the proposal and transaction payload in the vote, which would be deliberated in the lead-up to a delegate pushing the proposal for vote.

In theory the mechanism here could be deployed with end-to-end trustless execution and no intermediating action whatsoever (a permissionless pathway). This is the goal, but we can go beyond singular mechanisms to a wider programme.

TPP Decision Making

If we extend this idea to a more extensive programme of action, we may of course wish to continue with the programme if it leads to high-value, productive onchain outcomes.

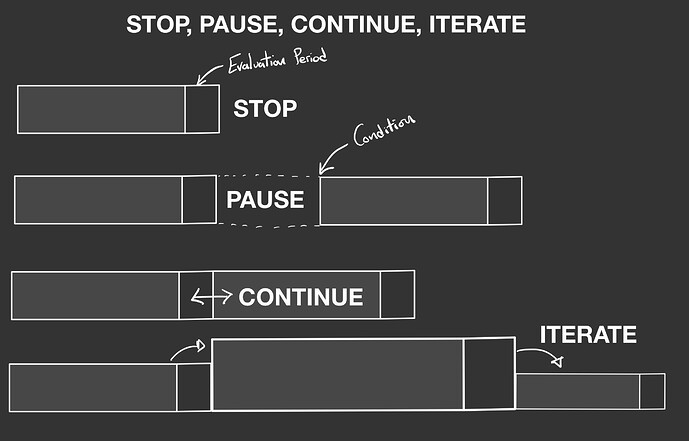

We therefore propose a simple framework for TPP decision-making, with the intention of building a coherent pipeline of deliberation that can scale effectively without spiraling into bureaucratic decay.

To promote feedback-driven decision making, the programme has an evaluation period that opens a fixed interval before programme end (PE), for example a 2 week evaluation period at the end of a 3 month token programme. The evaluation facilitates one of a set of mechanistic decisions:

STOP: This is simply a case of the minter cap being hit, causing the programme to self-terminate, with no further action necessary. This could be extended to KILL (perhaps through an escalation game) if a mid-programme mechanism is leading to obvious negative outcomes.

PAUSE: the evaluation period determines that the programme could perform well once a set of conditions is met. These conditions could be confirmed by a delegate vote, or could move out to oracle-defined conditions such as the ZK token price, an FDV threshold or a volume statistic being hit. Once they are met, the programme can restart using the same parameters as previously defined.

CONTINUE: a simple decision to maintain continuity in the programme by issuing another capped minter in the same parameters as the previous step in the programme.

ITERATE: a parameter change of the mechanism within the programme, for example more tokens, or fewer tokens and a shorter programme epoch.

A simple decision framework like this will allow TPPs to be chained in sequence and structured in a way that doesn't overload the delegate governance layer with voting or deliberative actions. The baked-in evaluation periods lead us to self-regulating feedback loops, a key feature of cybernetic governance.
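The four outcomes can be read as a small state transition: given the current epoch's parameters and a decision, what capped minter (if any) is issued next? The Python sketch below is one possible reading of the framework, not a specification. `Decision`, `ProgrammeParams` and `next_epoch` are hypothetical names, and the 5m / 60 day iteration is an invented example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    STOP = "stop"
    PAUSE = "pause"
    CONTINUE = "continue"
    ITERATE = "iterate"


@dataclass(frozen=True)
class ProgrammeParams:
    cap: int            # tokens the capped minter may mint in this epoch
    duration_days: int  # length of the epoch


def next_epoch(
    current: ProgrammeParams,
    decision: Decision,
    *,
    iterated: Optional[ProgrammeParams] = None,
    resume_condition_met: bool = False,
) -> Optional[ProgrammeParams]:
    """Return the parameters for the next capped minter, or None if none is issued."""
    if decision is Decision.STOP:
        return None  # cap reached; the programme simply ends
    if decision is Decision.PAUSE:
        # Restart with the same parameters only once the agreed condition holds.
        return current if resume_condition_met else None
    if decision is Decision.CONTINUE:
        return current  # another capped minter with identical parameters
    if decision is Decision.ITERATE:
        if iterated is None:
            raise ValueError("ITERATE needs the changed parameters")
        return iterated
    raise ValueError(f"unknown decision: {decision!r}")


epoch_1 = ProgrammeParams(cap=10_000_000, duration_days=90)
epoch_2 = next_epoch(
    epoch_1,
    Decision.ITERATE,
    iterated=ProgrammeParams(cap=5_000_000, duration_days=60),
)
```

Keeping the decision space this small is what lets each evaluation period resolve in a single vote.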

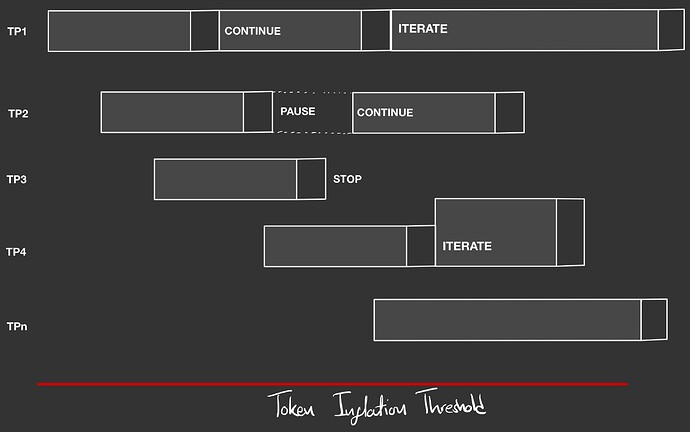

The above diagram shows a chained sequence of TPPs, structured so that delegates never need to focus on more than one decision or evaluation period at a time. This allows due consideration and structured decision-making periods, and promotes the creation of temporal Schelling points, increasing the probability of delegate coordination and engagement.

For example, these timing structures can be set up at proposal time to sequence decision-making periods in the last week of each month, with researchers coordinating in the weeks before to deliver the information delegates need to make effective, data-informed decisions.
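One way to check at proposal time that a set of programmes never asks delegates to evaluate two things at once is sketched below in Python. The function names, the 14 day evaluation window and the dates are all hypothetical. Windows are treated as half-open intervals, so one window may start on the day another ends.

```python
from datetime import date, timedelta
from typing import List, Tuple

Window = Tuple[date, date]  # (start, end), end exclusive


def evaluation_window(programme_end: date, eval_days: int = 14) -> Window:
    """The evaluation period runs for `eval_days` up to programme end."""
    return programme_end - timedelta(days=eval_days), programme_end


def windows_overlap(a: Window, b: Window) -> bool:
    return a[0] < b[1] and b[0] < a[1]


def has_conflicts(programme_ends: List[date], eval_days: int = 14) -> bool:
    """True if any two evaluation periods would run at the same time."""
    windows = sorted(evaluation_window(end, eval_days) for end in programme_ends)
    # All windows have equal length, so after sorting only neighbours can overlap.
    return any(windows_overlap(w1, w2) for w1, w2 in zip(windows, windows[1:]))


# Programmes ending a month apart leave delegates one decision at a time.
staggered = [date(2025, 3, 31), date(2025, 4, 30), date(2025, 5, 31)]
# Programmes ending a week apart would force overlapping evaluations.
crowded = [date(2025, 3, 31), date(2025, 4, 7)]
```

Here `has_conflicts(staggered)` is false and `has_conflicts(crowded)` is true, so the second schedule would need its end dates moved before going to a vote.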

Global Parameters

The GAP system has the potential to set the meta-rules for how token programmes play out as a wider framework, setting system boundaries. For example, setting the cadence of token programmes, evaluation schema and cryptoeconomic boundaries.

To illustrate the benefit of this, setting something like a Token Inflation Threshold as a global parameter bounds how many ZK tokens the wider token programme framework can bring to market in a given time frame. This will assure token holders that ZK will not be overspent and will allow sophisticated market actors to build predictive models of the economy. It will also allow delegates to deliberate on whether a particular TPP is worth using X% of the remaining allocation, and will promote healthy competition between token programmes.
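As a rough illustration of how such a threshold could frame delegate decisions, the Python sketch below tracks a budget and reports what share of the remaining allocation a proposed programme would consume. `InflationBudget` and the 100m threshold are invented for the example and are not parameters that exist in the ZKSync governance system.

```python
from dataclasses import dataclass


@dataclass
class InflationBudget:
    """Tokens that TPPs may mint within one period under a global threshold."""
    threshold: int
    committed: int = 0

    @property
    def remaining(self) -> int:
        return self.threshold - self.committed

    def share_of_remaining(self, cap: int) -> float:
        """Fraction of the remaining allocation a programme with `cap` would use."""
        if self.remaining <= 0:
            raise ValueError("no allocation left in this period")
        return cap / self.remaining

    def approve(self, cap: int) -> bool:
        """Commit `cap` tokens if the programme fits; otherwise leave the budget untouched."""
        if cap <= 0 or cap > self.remaining:
            return False
        self.committed += cap
        return True


budget = InflationBudget(threshold=100_000_000)  # hypothetical threshold
share = budget.share_of_remaining(10_000_000)    # the "X%" delegates would weigh
first_ok = budget.approve(10_000_000)            # fits within the threshold
second_ok = budget.approve(95_000_000)           # would breach it, so rejected
```

Because every approved programme reduces what is left for the rest, proposals end up competing for the same bounded allocation.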

This kind of framework will simplify the decisions delegates are required to make, allow a structure to form that is easy to map, and lead towards a set of characteristics that make far more optimal governance action possible than is conventional.

Programmes of Pluralistic Mechanisms

At this stage it is wise to deliberate on the nature of the mechanisms we would like to see in a wider token programme framework, one that effectively steers builders towards desired network outcomes in alignment with the ZK Credo.

This could be the context where the “infinite garden” ethos of Ethereum is brought to life through the cultivation and curation of a vibrant ecosystem of competing mechanisms, building resilience through diversity and creating a context where mechanisms are iterated rather than tried and abandoned.

A suggested list of features we might be looking for:

- End-to-End Trustless Execution: No manual intervention is required once the programme begins. It runs entirely through smart contracts, from vote to execution to end of the programme.

- Single Vote Triggers Multiple Outcomes: A single governance decision is enough to initiate a cascade of on-chain actions. It will be possible for a single vote payload to execute many instances of a Token Programme. For example, not one, but several instances of the simple token programme shown in the first image.

- Pipes, Not Buckets: The programme’s design ensures a smooth flow of tokens and decisions, rather than static allocations. This moves us away from using global consensus to deliberate complex and often messy grant-like decisions.

- Decentralised Decision Making: Outcomes are decided in a decentralised manner, relying on collective decision-making rather than central authority.

- Radical Openness: Transparency is key, ensuring all stakeholders understand how the programme functions and that every material action is auditable. If all relevant data is onchain and open, we minimise the potential for collusion and shadow structures to emerge.

- Evolutionary: Programmes are designed to adapt over time, iterating based on performance data and alignment with governance-agreed KPIs. This opens the potential for innovative evolutionary mechanism designs to emerge.

- Steerable: Minimal interventions are allowed but only when necessary, prioritizing dials (adjustments) over dialogue. Inter-programme steering could be possible through sub-structures, leveraging systems like Hats protocol and transparent voting mechanisms.

- Leverage Account Abstraction: One of ZKSync’s core strengths is native account abstraction. Token programmes could seed innovation in the use of this groundbreaking technological affordance, showcasing forkable mechanisms that can be deployed in the wider ecosystem.

Maturing Execution

It should be said that this is a new paradigm of thinking about how protocol governance systems operate and it will be non-trivial to realize full permissionless pathways immediately.

A maturation flow could be:

- Capped minters + multisig with accountability frameworks.

- Capped minters + discrete token emission mechanisms.

- Capped minters + logic and proof gating / Account abstraction integration / multi-programme factory contract execution

- Composable, cross-interacting token programme designs and emergent governance driven by ecosystem-wide feedback.

This attitude will move us away from the flawed paradigm of “progressive decentralization” towards progressive automation, allowing emergent complex behavior and ‘living’ ecosystem diversity.

The path towards a true cybernetic governance framework

The dream for DAOs is not to recreate the old world in the new, but to iterate towards fully automated permissionless systems that evolve through self-regulation and data informed decision making.

Blockchain-based decentralised governance systems are the site where this kind of system can evolve. The token programme framework in particular creates the right context for it to emerge.

By pushing humans to the edges, we open up the possibility for end-to-end trustless execution, which will minimise the potential for collusion, decision-making paralysis and doom loops of overspending.

Additionally, these systems will allow us to move from Human in the Loop systems to AI-based agentic decision making, and towards the end game of a decentralised, self-regulating cybernetic organism that powers ecosystem growth.

Summary

The ZKSync governance system as it is structured currently limits the paths towards conventional grant funding, and that is a good thing.

It opens up the possibility of more trust-minimised and eventually fully permissionless pathways leveraging the ZK token. By building out a highly predictable, maximally simple framework of decision-making, these onchain mechanisms can become data directed and ultimately fully autonomous. This will free up culture formation to take place in the ecosystem itself (supported by TPPs) rather than at the global consensus layer.

Open questions and prompts for discussion:

- How do we cultivate a highly pluralistic and competitive pipeline of token programmes that not only overcomes adoption barriers but encourages continuous innovation within the ecosystem without excessive reliance on governance?

- What foundational set of global parameters should we set to balance token stability, ecosystem growth and innovation within a meta-level token programme?

- What technical standards and interfaces are required to facilitate maturity towards trustless execution?

- What is the first set of token programmes we should be looking for?

- What kind of on-chain metrics, KPIs and oracles are required to enhance feedback loops and adaptive programme adjustments?

- How do we move from Human in the Loop to Agent in the Loop, and perhaps a Chain in the Loop end game?

- How can this system nurture values-aligned culture formation in the wider ecosystem?